Project Overview

Neptune Financial Inc.

NepFin is a next-generation financial services company whose commercial lending platform provides growth capital to mid-sized American businesses.

NepFin aims to use cutting-edge technology to empower the B2B lending market, which has been virtually untouched by innovation.

Investors include Third Point Ventures and Sands Capital.

Joining as one of the first engineers, I was responsible for establishing a scalable engineering infrastructure that could easily integrate a rapidly growing team. I played a significant role in setting up a microservices architecture that supported NepFin's many different technological endeavors. In addition, I drafted a number of technical documents that detail both backend and frontend best practices for engineers to follow.

I took the lead on NepFin's data collection efforts, collectively codenamed Sonar. I designed and developed a serverless architecture that supported frequent, large-scale web scraping across millions of pages. The scraped data was then piped through an ETL process to our data science team and transformed into structured data used by both the Business Development and Credit teams.

With my expertise in frontend development and applied web typography, I contributed to the design and led the development of many of NepFin's frontend interfaces -- including DealWatch and NepFin's homepage.

Sonar - Web Scraping At Scale

The concept behind Sonar was to collect as much data as possible about financial institutions that operate in the middle market. Our goal was to build the knowledge graph of the middle market, with fields including each institution's description, institution type, locations, number of employees, related news, and the details of its transactions. Similar aggregators already existed, such as PitchBook, Crunchbase, and CB Insights, but none had the depth of information we desired. We wanted to build something better.

The engineering and product teams had a number of discussions on how to collect this information. As we investigated the problem, we realized that the majority of the financial institutions listed the desired information on their websites. We decided to crawl the website of every financial institution that matched our criteria, and then structure the data collected from those websites through machine learning.

I took the lead on this project and developed the crawler. The crawler starts by scraping the company's root domain URL and finding all links on the page that point to the same domain. It then repeats this process recursively on each linked page until there are no new pages left. This approach is very similar to Googlebot, the web crawler Google uses to index sites for its search engine.
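The same-domain crawl described above can be sketched roughly as follows. This is a minimal Python illustration under stated assumptions, not Sonar's actual code: `crawl_site`, `LinkExtractor`, the `max_pages` cap, and the injected `fetch_html` callable are all hypothetical names, and the sketch uses an explicit queue instead of literal recursion so that large sites do not hit a recursion limit.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def crawl_site(
    root_url: str,
    fetch_html: Callable[[str], str],  # hypothetical: returns the HTML for a URL
    max_pages: int = 1000,             # assumed safety cap, not from the original
) -> dict[str, str]:
    """Visit every page reachable from root_url on the same domain.

    Returns a mapping of URL -> HTML for each page visited.
    """
    root_host = urlparse(root_url).netloc
    queue = deque([root_url])
    seen = {root_url}
    pages: dict[str, str] = {}

    while queue and len(pages) < max_pages:
        url = queue.popleft()
        html = fetch_html(url)
        pages[url] = html

        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.hrefs:
            # Resolve relative links and drop #fragments so each page is queued once.
            link, _fragment = urldefrag(urljoin(url, href))
            if urlparse(link).netloc == root_host and link not in seen:
                seen.add(link)
                queue.append(link)

    return pages
```

Passing the fetcher in as a parameter keeps the traversal logic independent of how a page is loaded, so a plain HTTP client or a headless browser could be swapped in without touching the crawl itself.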

The infrastructure to support scraping at scale was its own challenge. The first iteration of Sonar scraped websites asynchronously via GET requests. However, I quickly realized that this was not a viable method, as content loaded with JavaScript or AJAX requests was completely missed by the GET request. In addition, even with multi-threading, the speed of the scraper started to become an issue as the scale grew larger.

The solution that I devised was to implement a serverless architecture that leveraged AWS Lambda. A cron job that runs daily triggers the start of the scrape job, launching a Lambda for each financial institution's site. Each of those Lambdas spins up its own instance of Puppeteer -- a Node.js library that drives a headless Chrome browser capable of running JavaScript, among other capabilities. The crawling logic is executed through Puppeteer, and the resulting data is posted to NepFin's database. Additionally, metadata about the scrape is logged too -- such as scrape job id, datetime of the start and end of the scrapes, and any errors -- to allow for easy debugging in cases of unexpected results.
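To make the fan-out and the metadata logging concrete, here is a rough Python sketch. It is an illustration under assumptions rather than the production code: the names `ScrapeRecord`, `dispatch_scrape_job`, and `run_worker`, the record's field names, and the injected `invoke`, `scrape`, and `log` callables are all hypothetical. On AWS, `invoke` could wrap boto3's Lambda client `invoke` call with `InvocationType="Event"` for asynchronous invocation, and `scrape` stands in for the browser-based crawl.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Callable, Iterable, Optional


@dataclass
class ScrapeRecord:
    """Metadata logged for one site's scrape (field names are illustrative)."""
    job_id: str
    site_url: str
    started_at: str
    ended_at: Optional[str] = None
    errors: list[str] = field(default_factory=list)


def utc_now() -> str:
    """Current UTC time as an ISO 8601 string."""
    return datetime.now(timezone.utc).isoformat()


def dispatch_scrape_job(site_urls: Iterable[str], invoke: Callable[[str], None]) -> str:
    """Entry point for the daily trigger: start one worker per site.

    invoke is a hypothetical callable that receives a JSON payload and
    launches a worker with it (for example, an asynchronous Lambda invocation).
    """
    job_id = str(uuid.uuid4())
    for url in site_urls:
        invoke(json.dumps({"job_id": job_id, "site_url": url}))
    return job_id


def run_worker(
    payload: str,
    scrape: Callable[[str], None],  # stand-in for the headless-browser crawl
    log: Callable[[dict], None],    # stand-in for writing to the metadata store
) -> ScrapeRecord:
    """Body of a single worker: scrape one site and always log its metadata."""
    event = json.loads(payload)
    record = ScrapeRecord(event["job_id"], event["site_url"], started_at=utc_now())
    try:
        scrape(record.site_url)
    except Exception as exc:
        # Keep the error with the record so unexpected results can be debugged later.
        record.errors.append(repr(exc))
    finally:
        record.ended_at = utc_now()
        log(asdict(record))
    return record
```

Because every worker shares the job id generated by the dispatcher, all records from one daily run can be grouped together when investigating a failure.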

The final product is a fast, large-scale web scraper, with every financial institution's website scraped in parallel. It also resulted in around 300% lower costs compared to the previous iteration, thanks to AWS Lambda being quite affordable.

Structuring Unstructured Data

With the HTML of each financial institution's website collected, the next challenge was transforming that unstructured HTML into structured data. With a small team of four, I led the design and development of an ETL data processing pipeline to structure the raw data.

One of the first questions we asked ourselves was, "Which aspects of the data processing can be solved algorithmically (with machine learning or a rules-based approach), and which are better suited to manual human intelligence?" For example, it was relatively easy to train a machine learning model to categorize a web page as a Deal Portfolio page from its HTML. However, we found that it was non-trivial for the model to gather the exact details of the transactions listed on the page. Instead, we relied on machine learning mostly for classification, while humans added details to each entry using an interface we designed.

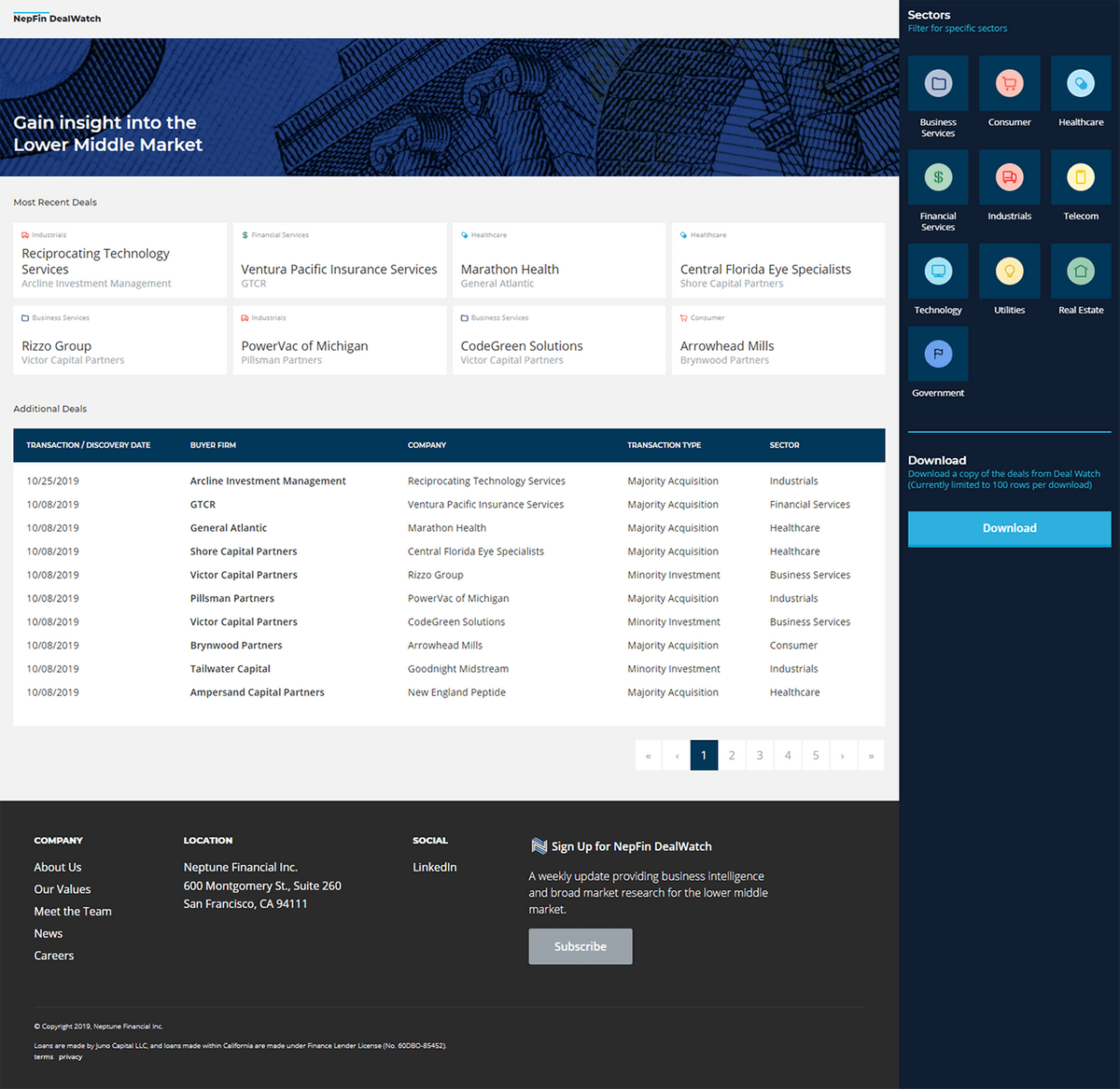

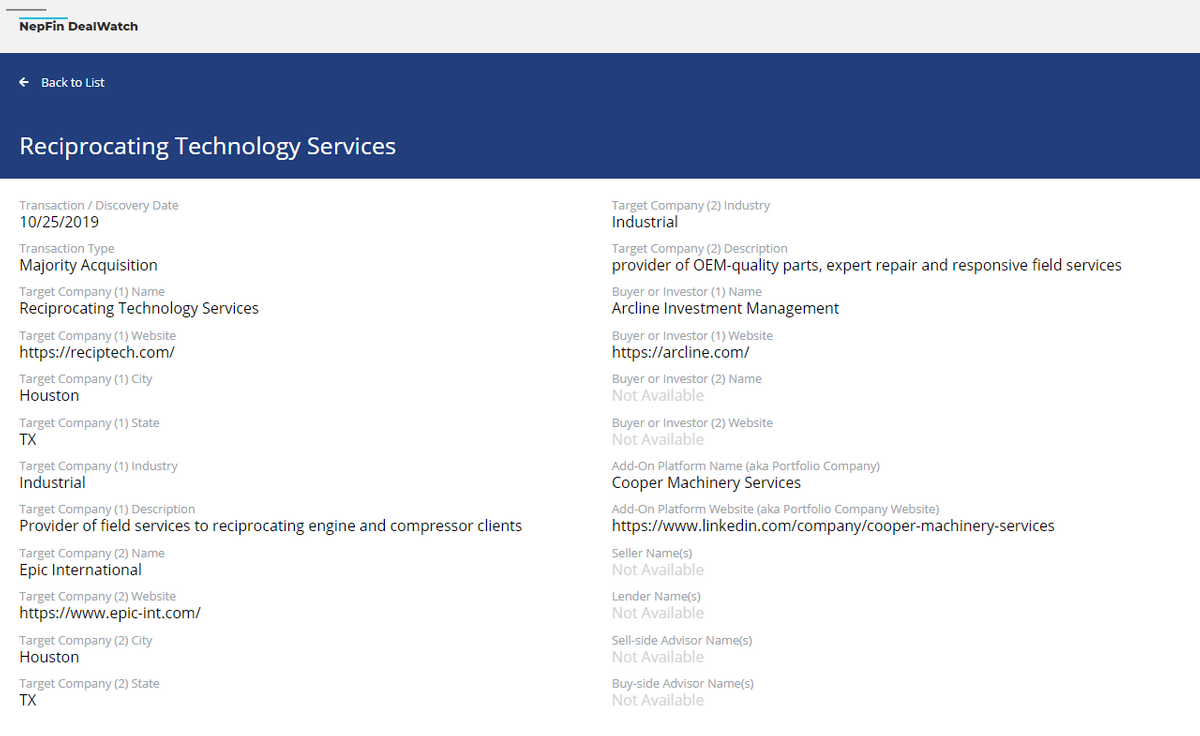

An additional benefit of the web crawler's flexible infrastructure was that it allowed us to run it daily. When we passed each day's scraped data through a diff engine, we found that we were actually capturing newly added deals on a near real-time basis. With this data, we launched our first external product: DealWatch -- a public catalogue of transactions in the middle market. As of October 2019, Sonar captured ~60% more deals than other financial data providers, along with much more context about each transaction.
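The day-over-day comparison can be sketched roughly as below. This is a simplified Python illustration, not the actual diff engine: the `new_deals` function and the `institution`, `company`, and `deal_type` fields are hypothetical, and the sketch assumes each deal has already been structured into a dictionary.

```python
from typing import Sequence


def new_deals(
    previous: Sequence[dict],
    current: Sequence[dict],
    key_fields: Sequence[str] = ("institution", "company", "deal_type"),  # assumed fields
) -> list[dict]:
    """Return the deals in today's scrape that were absent from yesterday's."""

    def identity(deal: dict) -> tuple:
        # Normalize case and whitespace so cosmetic edits do not look like new deals.
        return tuple(str(deal.get(name, "")).strip().lower() for name in key_fields)

    seen = {identity(deal) for deal in previous}
    fresh = []
    for deal in current:
        key = identity(deal)
        if key not in seen:
            seen.add(key)  # also drops duplicates within today's scrape
            fresh.append(deal)
    return fresh
```

Comparing on a small set of identifying fields, rather than on the raw HTML, means that layout changes on a portfolio page are ignored while a newly listed transaction still surfaces.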

To promote DealWatch, we started a weekly newsletter that details the most interesting transactions that occurred each week in the middle market. As of October 2019, more than 3,000 private equity and investment banking professionals were subscribed.

Visit DealWatch Site